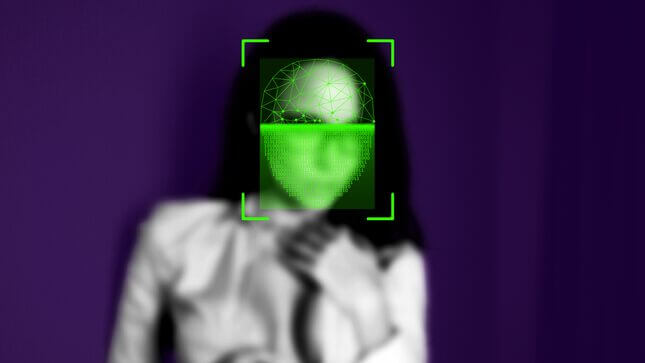

‘Deepfake Porn’ Is Getting Easier and Easier to Make

What does it mean for consent as deepfakes become increasingly accessible?


Graphic: Elena Scotti (Photos: Shutterstock)

Researcher Henry Ajder has spent the last three years monitoring the landscape of deepfakes, in which artificial intelligence is used to swap people’s faces into videos. He spends a lot of time “going into the dark corners of the internet,” as he puts it, in hopes of keeping tabs on the malicious uses of synthetic media. Ajder has seen a lot of disturbing things, but a few months ago, he came across something he’d never seen before. It was a site that allowed users to simply upload a photo of someone’s face and produce a high-fidelity pornographic video that appeared to be a digital reproduction of that person. “That is really, really concerning,” he says.

Ajder alerted Karen Hao, a journalist who covers artificial intelligence. Last month, she wrote about the site in MIT Technology Review, bringing attention to the specter of free, easily created deepfake porn. “[T]he tag line boldly proclaims the purpose: turn anyone into a porn star by using deepfake technology to swap the person’s face into an adult video,” wrote Hao. “All it requires is the picture and the push of a button.” The next day, following an influx of media attention, the site was taken down without explanation.

But the site’s existence shows how easy and accessible the technology has become.

Experts have warned that deepfakes could be used to spread fake news, undermine democracy, influence elections, and cause political unrest. While deepfakes of Russian President Vladimir Putin and North Korean leader Kim Jong-un have been produced (ironically, for political ads underscoring threats to American democracy), the most common application is porn, and the most common targets are women. Deepfakes first stepped onto the public stage via videos mapping celebrities’ faces onto porn performers’ bodies and have since affected non-celebrities, sometimes as a doctored version of “revenge porn,” in which, say, an ex spreads a digitally created sex tape that can be practically indistinguishable from the real thing. Resources like the website Ajder discovered make sexualized deepfakes far easier to produce, and even when individual domains are taken down, the technology lives on.

Jezebel spoke with Ajder about the implications of what he calls “deepfake image abuse,” and the complex challenges in addressing this genre of nonconsensual porn. Our conversation has been edited for clarity.

JEZEBEL: You prefer to use the phrase “deepfake image abuse” instead of “deepfake porn.” Can you explain?

HENRY AJDER: Deepfake pornography is the established phrase for the use of face-swapping tools, or of algorithms that digitally strip the clothes from images of women, and it comes from Reddit, where the term first emerged exclusively in this context of nonconsensual sexual image abuse. The term itself, deepfake pornography, seems to imply, as we assume with pornography, that there is a consensual element. It obscures that this is actually a crime, that this is a form of digital sexual harassment, and it doesn’t accurately reflect what is really going on, which is a form of image abuse.


In layman’s terms, how is deepfake image abuse created?
